How I Use AI to Trade Like Williams Inference (20+ Years Later)

When I started at Avalon Research more than 20 years ago, markets felt different.

Desks had screens and data – but also things you rarely see now. Traders used turrets (multi-line phones with direct lines to floor brokers). Almost every station had stacks of print – newspapers, trade magazines, and niche newsletters.

My first must-read came from a small group called Williams Inference.

Long before we stared at feeds, their network clipped articles, circled odd facts, and mailed them in. The method was simple: read widely, notice anomalies, connect thin clues into a theme you can act on. Not secret data – pattern sense.

You didn’t wait for a glossy report. You built your own.

A single story about a new plant was noise. But when permits, supplier quotes, job postings, and longer lead times started to rhyme, that became signal. Jim Williams called it inferential thinking. Others later used the term “reduced-cue analysis.” Different labels, same habit: pay attention to the small stuff and let it add up.

That habit matters again – right now – because AI is shifting from labs to daily use.

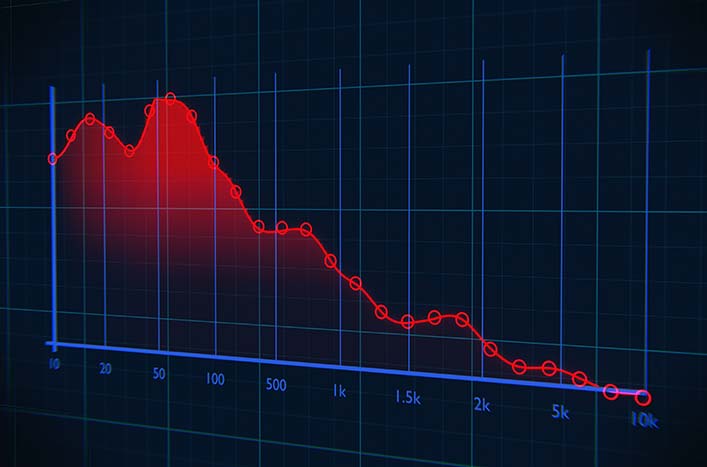

Phase one was training giant models. Phase two will run them everywhere. (Yes, the AI world calls that inference too.) Every answer an AI provides uses power, creates heat, and moves data. Those are physical needs, not marketing lines. They trigger real orders for real companies.

If you keep tallies like Williams did, you can watch the buildout take shape.

Teaching AI to “Think” like Williams Inference

Here’s what’s new. Teams are training AI to spot weak signals at scale – not to guess prices tick-by-tick, but to notice oddities, link them, and write a clear, testable claim: “Spending is about to shift here because of these cues.”

Here’s how it works (in plain English):

- Feed the model messy inputs: news blurbs, permit filings, supplier comments, shipping data, call snippets.

- The model flags what’s out of pattern: a spike in data center permits in one county; longer transformer lead times; a jump in 400G/800G optical orders; a cooling pilot that quietly expanded.

- It links the blips by place, vendor, product, and time. What took humans days with a highlighter takes minutes.

- It drafts the first sentence an analyst can live with – “Liquid cooling is moving from pilot to rollout in these sites” – plus a short checklist: backlog rising, book-to-bill above 1, small margin lift, new field-service hiring. If those confirmations aren’t there, the thesis waits.
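The flag-and-link steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the tooling any team actually uses. The `Cue` record, the sample numbers, and the 2-standard-deviation threshold are all hypothetical. A reading counts as "out of pattern" when it sits well above its own history (a simple z-score test). Flagged cues that share a place and month are then grouped, and only groups with at least two independent cues are kept.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Cue:
    """One hypothetical weak signal, e.g. a monthly permit count for a county."""
    source: str           # where the cue came from (permits, supplier notes, ...)
    place: str            # county or site
    month: str            # "YYYY-MM"
    value: float          # this month's reading
    history: list[float]  # prior readings of the same series


def is_anomaly(cue: Cue, threshold: float = 2.0) -> bool:
    """Flag a reading more than `threshold` standard deviations above its history."""
    if len(cue.history) < 2:
        return False  # not enough history to judge what "normal" looks like
    sd = stdev(cue.history)
    if sd == 0:
        return cue.value > cue.history[0]  # flat history: any rise is out of pattern
    return (cue.value - mean(cue.history)) / sd > threshold


def link_cues(cues: list[Cue]) -> dict[tuple[str, str], list[str]]:
    """Group flagged cues by (place, month); keep groups with two or more sources."""
    clusters: dict[tuple[str, str], list[str]] = defaultdict(list)
    for cue in cues:
        if is_anomaly(cue):
            clusters[(cue.place, cue.month)].append(cue.source)
    # One blip is noise; a theme needs more than one cue pointing the same way.
    return {key: srcs for key, srcs in clusters.items() if len(srcs) >= 2}


# Made-up sample data for illustration only.
cues = [
    Cue("data center permits", "County A", "2025-06", 9, [2, 3, 2, 3]),
    Cue("transformer lead times (weeks)", "County A", "2025-06", 80, [50, 52, 51, 53]),
    Cue("job postings", "County B", "2025-06", 11, [10, 12, 11, 10]),
]
print(link_cues(cues))
```

With this sample data, County A's permit spike and longer transformer lead times are both flagged and linked into one cluster, while County B's job postings sit inside their normal range and drop out. Grouping on place and month is the simplest linking rule; vendor or product keys could be added the same way.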

That’s Williams Inference for the AI age: lots of weak cues, tight linking, one clean claim, and a few facts that can prove or kill it.
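Those confirmations can be checked mechanically. Here is a minimal sketch that assumes you already have two consecutive quarters of figures for a company. Book-to-bill is taken as orders booked divided by revenue billed in the same period. The `Quarter` fields, the sample numbers, and the rule that every check must pass are illustrative assumptions, not an actual screen.

```python
from dataclasses import dataclass


@dataclass
class Quarter:
    """Hypothetical quarterly figures for one company (any consistent currency unit)."""
    orders: float                # new orders booked in the quarter
    revenue: float               # revenue billed in the quarter
    backlog: float               # unfilled orders at quarter end
    gross_margin: float          # e.g. 0.31 for 31%
    field_service_openings: int  # open field-service job postings


def book_to_bill(q: Quarter) -> float:
    """Orders booked divided by revenue billed; above 1 means bookings outran billings."""
    return q.orders / q.revenue


def confirmations(prev: Quarter, curr: Quarter) -> dict[str, bool]:
    """Check the four confirmations from the checklist, quarter over quarter."""
    return {
        "backlog rising": curr.backlog > prev.backlog,
        "book-to-bill above 1": book_to_bill(curr) > 1.0,
        "margin lift": curr.gross_margin > prev.gross_margin,
        "new field-service hiring": curr.field_service_openings > prev.field_service_openings,
    }


def thesis_ready(prev: Quarter, curr: Quarter) -> bool:
    """The claim moves forward only if every confirmation is present; otherwise it waits."""
    return all(confirmations(prev, curr).values())


# Made-up sample figures for illustration only.
prev = Quarter(orders=90, revenue=100, backlog=240, gross_margin=0.30, field_service_openings=12)
curr = Quarter(orders=126, revenue=105, backlog=261, gross_margin=0.31, field_service_openings=20)
print(round(book_to_bill(curr), 2))  # 1.2
print(thesis_ready(prev, curr))      # True
```

If orders had come in at 95 against the same 105 of revenue, book-to-bill would fall to about 0.9 and the thesis would wait, even with the other three checks passing. Requiring every check is the strictest possible gate. A looser version could require, say, three of four.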

Why Inference Matters Now

AI “use” pushes money into four spend lines first:

- Power (conversion, protection, reliable supply)

- Cooling (from air to liquid systems at scale)

- Interconnects (faster links inside and between data centers: 100G → 400G → 800G upgrades)

- On-site build/serve (install, commission, maintain)

You often see the turn in small places: a rushed substation permit, a fiber backlog note, a vendor adding a second shift for manifold assembly. These are thin cues – exactly the kind AI can sift across thousands of sources.

In short, we’re using AI inference to track the AI-inference buildout. When usage climbs, the same names repeat, the confirmations show up, and orders follow.

AI is tireless at collecting and clustering. It reads what you can’t and remembers all of it. It also forces structure – who/where/when, what changed, what’s unproven.

But it will also spin a sharp story from a shallow pool if you let it.


YOUR ACTION PLAN

Humans add three guardrails:

1) Context: “That project was announced last year; this is just a permit step.”

2) Risk: “Single-customer exposure is high.”

3) Common sense and logic: “Even if the theme is right, this company’s balance sheet can’t handle delays.”

That’s why I treat AI-assisted inference as a theme engine, not a Magic 8-Ball. Use it to surface candidates – and to disqualify pretenders.


So where does our scan keep pointing? Run an AI-first, Williams-style read of the current cycle, and the same cluster lights up again and again:

- Power conversion and protection

- Liquid cooling moving from pilots to fleets

- 400G everywhere, with 800G entering production

- A handful of niche installers winning follow-on work

From that cluster, we narrowed the field to seven small names that meet those confirmations.

Good investing,

Kristin

FUN FACT FRIDAY

While Deep Blue needed a room-sized supercomputer to barely beat Garry Kasparov in 1997, today’s top chess AI (Stockfish) runs on your smartphone and would crush every human champion in history – combined.

In fact, the gap is so huge that modern engines are rated above 3600 on engine rating lists (a separate pool from human ratings, so the comparison is rough), while the highest-rated human ever (Magnus Carlsen at his peak) topped out at 2882. That’s like an adult playing chess against a toddler… and the toddler is the human!
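To put that gap in numbers, the standard Elo expected-score formula gives a player rated R_A an expected score of 1 / (1 + 10^((R_B - R_A) / 400)) against an opponent rated R_B, counting a win as 1 and a draw as half a point. Here is a quick sketch using the two figures above. One caveat: engine ratings come from engine-vs-engine lists, so plugging them into a human-vs-engine formula is only a rough illustration.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) for player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


human_peak = 2882  # Carlsen's peak rating, from the text
engine = 3600      # "above 3600" from the text, used here as a round floor
share = elo_expected_score(human_peak, engine)
print(f"{share:.3f}")  # 0.016
```

That works out to roughly 1.6 points per 100 games for the best human ever, and the real gap is likely wider, since 3600 is a floor.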

More from Trade of the Day

Why Smart Traders Avoid These Stocks Like the Plague

Feb 19, 2026

Two Footwear Stocks Ready to Follow CROX Higher

Feb 18, 2026

Are the Banks Destroying Your Savings?

Feb 16, 2026